AI in space is not just for government officials or the military; it’s available to anyone who wants to access it. At SkyWatch, we believe in simplicity. We’ve taken a very challenging data engineering problem (obtaining, processing, and standardizing satellite data) and built a platform that abstracts away the enormous complexity of delivering data from space.

Artificial intelligence is something we get asked about a lot. People ask whether we are building machine learning and computer vision algorithms to count cars or buildings, or to estimate how much oil is stored in floating-roof tanks. There are many use cases to explore, and a lot of innovation is happening in this area that will significantly lower costs for many industries.

Our answer is that many of our customers do these exciting, cutting-edge things. Because we want to stay laser-focused on the problem we are solving (aggregating the world’s Earth observation satellite data), we concentrate our AI and machine learning efforts on pragmatic AI within our own systems. These value-driven functions, simple in concept, aim to remove humans from decision-making and optimize the delivery of data from space. We’ve built computer vision models for image quality assessment and cloud classification, so our customers can receive data without a human in the loop. We also see a huge opportunity to optimize space assets by orchestrating demand to the most efficient collection vehicles.

On June 1, 2020, I was lucky enough to participate in the AIxSpace webinar, exploring how AI is used in various space-related industries. Here are some of the questions that were asked and my thoughts on each topic:

What are real case examples (today) of AI applied in the space environment?

We believe AI works best embedded within many of our operations rather than at their center. While many companies focus on the “last mile,” we focus on removing humans from the demand and fulfillment pipelines.

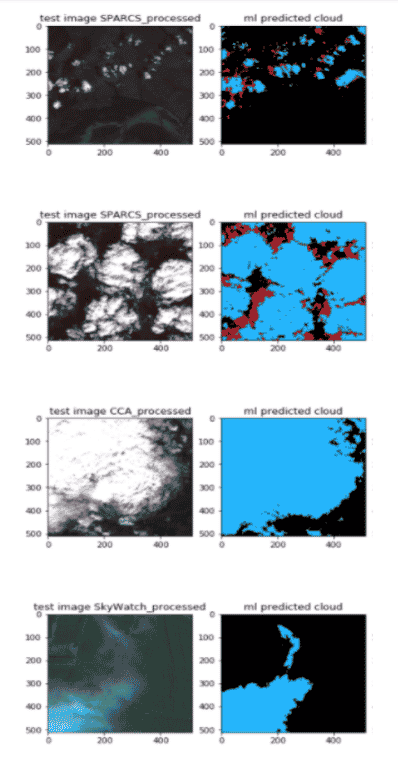

As an example, we use a deep learning model based on a convolutional neural network (CNN), an architecture well suited to learning spatial features such as edges, to predict clouds and haze across multiple data sources. Some key issues we considered when developing this model:

- Any Earth observation product must detect clouds, since roughly 70% of Earth is covered by clouds on any given day.

- Satellite operators view cloud masking as a cost of doing business, a non-value-added service.

- Across the industry, cloud prediction models are typically optimized for specific data sources.

- Models that work on low, medium, and high-resolution data with different band combinations can be challenging to develop.

- Developing a model that can handle the massive size of high-resolution images is very challenging. The majority of published models use smaller, open-data images.

- Additionally, we had to develop a method of predicting clouds in non-georeferenced preview images in archive catalogues.
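Handling very large high-resolution scenes usually means splitting them into tiles small enough for the model to process. The following is a minimal sketch, assuming square tiles with a fixed overlap; the function name and the 512 and 64 pixel defaults are illustrative assumptions, not SkyWatch’s settings. The last tile in each direction is shifted back so that it ends exactly at the image edge.

```python
from typing import Iterator, List, Tuple

# A tile window: (row_offset, col_offset, height, width) in pixels.
Window = Tuple[int, int, int, int]


def tile_windows(height: int, width: int, tile: int = 512, overlap: int = 64) -> Iterator[Window]:
    """Yield overlapping windows that together cover a height x width image.

    Hypothetical helper for illustration. Tiles advance by (tile - overlap);
    the final tile in each direction is placed flush with the image edge.
    """
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be in [0, tile)")
    step = tile - overlap

    def starts(size: int) -> List[int]:
        if size <= tile:
            return [0]  # the whole dimension fits in one tile
        offsets = list(range(0, size - tile, step))
        offsets.append(size - tile)  # final tile ends exactly at the edge
        return offsets

    for r in starts(height):
        for c in starts(width):
            yield (r, c, min(tile, height), min(tile, width))
```

Each window can then be read from the full scene, passed through the model, and the per-tile predictions stitched back together, for example by averaging the overlapping regions.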

Our model currently achieves over 90% accuracy. There are still many corner cases to optimize for, and we’ll continue to improve this model.

Side-by-side comparison images showing results of the SkyWatch EarthCache™ machine-learning cloud detection model. Earth observation images containing clouds are on the left, and the cloud mask extracted from each image is on the right.
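A cloud mask like the ones above is typically derived from per-pixel model scores. The sketch below assumes the model outputs a cloud probability per pixel, applies an assumed 0.5 cutoff, and reports cloud cover and pixel accuracy against a reference mask. The post does not say which accuracy metric the 90% figure uses, so pixel accuracy here is only one plausible reading, and all function names are hypothetical.

```python
from typing import List, Sequence

Mask = List[List[int]]  # 1 = cloud, 0 = clear


def threshold_mask(probs: Sequence[Sequence[float]], cutoff: float = 0.5) -> Mask:
    """Convert per-pixel cloud probabilities into a binary cloud mask."""
    return [[1 if p >= cutoff else 0 for p in row] for row in probs]


def cloud_cover(mask: Mask) -> float:
    """Fraction of pixels in the mask flagged as cloud."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total


def pixel_accuracy(pred: Mask, truth: Mask) -> float:
    """Fraction of pixels where the predicted label matches the reference label."""
    pairs = [(p, t) for pred_row, truth_row in zip(pred, truth) for p, t in zip(pred_row, truth_row)]
    return sum(p == t for p, t in pairs) / len(pairs)
```

A cloud cover fraction computed this way is the kind of value a catalogue search or delivery rule can filter on without anyone looking at the image.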

We also built a CNN-based image quality prediction model to determine whether an image is still useful to our customers when it contains problems. In the past, humans have provided quality assurance (QA) on Earth observation (EO) data deliveries, but there is no human QA in a machine-to-machine environment. Some things we discussed while developing this model:

- We must ensure high-quality results in an autonomous environment of demand-driven Earth observation collections and near real-time delivery.

- The world is made up of many landscapes, which makes it very challenging to define a “high quality” result in Earth observation.

- A sensor or processing anomaly should be the first thing to be investigated.

Our current image quality prediction model has shown it can reduce the amount of clearly faulty data that reaches our customers. We’ll be adding more and more components to this model and iterating on its performance in the coming months.
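Without a human in the loop, a quality score has to be turned into an automatic routing decision. The sketch below is hypothetical: the `QualityResult` fields, the `sensor_anomaly` flag and the 0.8 and 0.3 thresholds are assumptions for illustration. Anomalies are checked first, following the point above that a sensor or processing anomaly should be investigated before anything else.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    DELIVER = "deliver"
    INVESTIGATE = "investigate"
    REJECT = "reject"


@dataclass
class QualityResult:
    image_id: str
    quality_score: float  # assumed model output in [0, 1]; higher means more usable
    sensor_anomaly: bool  # hypothetical flag from upstream processing metadata


def gate(result: QualityResult, accept: float = 0.8, reject: float = 0.3) -> Decision:
    """Route an image based on its predicted quality (illustrative thresholds)."""
    if result.sensor_anomaly:
        return Decision.INVESTIGATE  # check for sensor/processing faults first
    if result.quality_score >= accept:
        return Decision.DELIVER
    if result.quality_score < reject:
        return Decision.REJECT
    return Decision.INVESTIGATE  # uncertain band: hold for reprocessing or re-collection
```

Keeping an explicit middle band lets uncertain images be held back rather than forcing every borderline case into deliver or reject.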

Where do you see the greatest upside opportunity or largest impact area for AI in space?

We believe that optimizing space assets, both within a single constellation and across multiple constellations, offers significant potential. For organizations like the Canadian Space Agency, collecting data with the RADARSAT Constellation Mission (RCM) may be unnecessary if comparable products are available from other SAR satellites, such as Sentinel-1, or from commercial SAR providers. By predicting where alternative collections can satisfy a request, millions of dollars can be saved. SkyWatch sits in the value chain to make it easy for participants to access and aggregate this data.
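The idea of predicting alternative collections can be illustrated with a toy selection rule: before tasking a satellite, compare the ways a request could be fulfilled and pick the cheapest one that still meets its requirements. This is a minimal sketch only; the `Option` fields, source names, resolutions and costs are made up, and a real system would also weigh timing, incidence angle, polarization and more.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Option:
    source: str          # hypothetical label for a way to fulfil the request
    sensor_type: str     # "SAR" or "optical"
    resolution_m: float  # ground sample distance in metres
    cost: float          # estimated cost of fulfilling the request this way


def cheapest_option(options: List[Option], sensor_type: str, max_resolution_m: float) -> Optional[Option]:
    """Return the lowest-cost option that meets the sensor and resolution requirements."""
    feasible = [o for o in options if o.sensor_type == sensor_type and o.resolution_m <= max_resolution_m]
    return min(feasible, key=lambda o: o.cost, default=None)


# Illustrative options; names, resolutions and costs are invented.
options = [
    Option("new tasking (mission A)", "SAR", 5.0, 1000.0),
    Option("archive (mission B)", "SAR", 10.0, 0.0),
    Option("commercial SAR (provider C)", "SAR", 3.0, 600.0),
    Option("optical (provider D)", "optical", 0.5, 200.0),
]
```

With these numbers, a SAR request that tolerates 10 m resolution is met from the archive at no cost, while a 5 m requirement falls through to the commercial option instead of new tasking.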

Multi-source coregistration has also been extremely challenging to deliver, compounded by radiometric calibration differences between satellites and varying collection angles. As computer vision evolves and more input variables become available, this type of processing will become more feasible.
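At its simplest, coregistration means estimating the offset between two images of the same area. The sketch below does a brute-force search over integer pixel shifts and keeps the shift with the lowest mean squared difference. It assumes both images share the same pixel grid and comparable radiometry. Real multi-source coregistration must also handle sub-pixel offsets, differing resolutions, calibration differences and viewing geometry, which is exactly what makes it hard. The function name and `max_shift` parameter are hypothetical.

```python
from typing import List, Tuple

Image = List[List[float]]


def estimate_shift(ref: Image, moving: Image, max_shift: int = 3) -> Tuple[int, int]:
    """Find the integer (dy, dx) such that moving[y + dy][x + dx] best matches ref[y][x].

    Both images are assumed to have the same dimensions. For each candidate
    shift, the mean squared difference over the overlapping pixels is computed
    and the shift with the lowest error is returned.
    """
    h, w = len(ref), len(ref[0])
    best, best_err = (0, 0), float("inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            diffs = [
                (ref[y][x] - moving[y + dy][x + dx]) ** 2
                for y in range(h)
                for x in range(w)
                if 0 <= y + dy < h and 0 <= x + dx < w
            ]
            if diffs:
                err = sum(diffs) / len(diffs)
                if err < best_err:
                    best, best_err = (dy, dx), err
    return best
```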

Another large opportunity is onboard processing such as cloud detection. It requires highly efficient, low-power models, but it saves downlink costs and allows rapid rescheduling.
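Once each tile has an onboard cloud estimate, the downlink saving is simple arithmetic. This minimal sketch assumes fixed-size tiles and an illustrative 80% cloud threshold; the function name and parameters are hypothetical.

```python
from typing import List, Tuple


def downlink_plan(tile_cloud_fractions: List[float], tile_size_mb: float,
                  max_cloud: float = 0.8) -> Tuple[List[int], float]:
    """Choose which tiles to downlink and estimate the data saved.

    Tiles whose estimated cloud fraction exceeds `max_cloud` are dropped
    onboard instead of being transmitted. Returns the indices of the tiles
    to keep and the megabytes not sent.
    """
    keep = [i for i, f in enumerate(tile_cloud_fractions) if f <= max_cloud]
    saved_mb = (len(tile_cloud_fractions) - len(keep)) * tile_size_mb
    return keep, saved_mb
```

The same per-tile estimate could also trigger a new collection attempt over the discarded area, which is where rapid rescheduling comes in.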

Object and change detection is another widely discussed use case.

Satellite images showing the changes to Panama City Beach before and after Hurricane Michael in 2018. Several buildings are destroyed and roofs are visibly damaged. Images were taken by Planet’s SkySat satellites (80 cm resolution).
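The simplest change detection baseline is pixel differencing between co-registered before and after images. The sketch below is that baseline only, not a description of any model used to produce the images above. It assumes the images are already aligned and radiometrically comparable, and the function name and threshold are hypothetical.

```python
from typing import List, Tuple

Image = List[List[float]]


def changed_pixels(before: Image, after: Image, threshold: float) -> List[Tuple[int, int]]:
    """Return (row, col) positions where |after - before| exceeds `threshold`."""
    return [
        (r, c)
        for r, (row_b, row_a) in enumerate(zip(before, after))
        for c, (b, a) in enumerate(zip(row_b, row_a))
        if abs(a - b) > threshold
    ]
```

Differencing flags every change, including seasonal vegetation and lighting, which is why learned models are used to separate meaningful changes (such as destroyed buildings) from noise.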

AI and space are two very broad areas. Where do they converge and diverge? What can the space industry learn from early adopters of AI?

We consider AI most impactful when it is applied practically. Examining your existing activities and operations can reveal many opportunities to automate judgment calls: predicting compute resource utilization, determining the best time to run work in a given region, or estimating how likely a customer is to accept an output as high quality. We believe this “bottom-up” approach to AI projects is more viable than a “top-down” strategy; without a suitable use case, any AI-based solution will fail.

How will the Covid-19 pandemic impact the adoption of AI in space?

Interest in Earth observation (EO) has skyrocketed since the pandemic started and lockdowns kept people at home. For example, a customer that verifies organic farms could not send personnel to assess compliance on site and turned to remote sensing data instead. Smart cities and oil and gas pipeline monitoring are among the many other applications where EO data is being adopted faster during this crisis, with some companies even seeing cost savings.

What are the major bottlenecks to transition from change detection to predictive analytics?

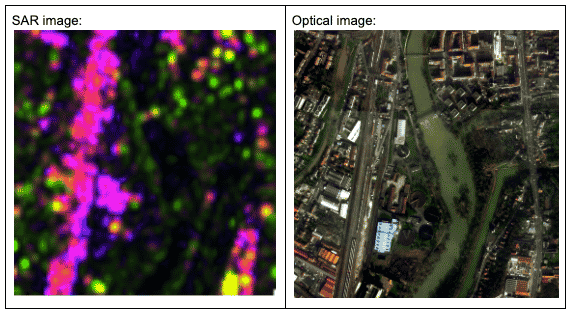

Once change detection is established, it can be refined further to predict which changes actually matter. For example, a customer built an app that monitors oil and gas pipelines. It collects Sentinel-1 SAR data to detect activity near and within the pipeline’s boundary, then requests higher-resolution optical imagery only for the flagged areas. This cost-effective approach lets them improve their detection process and generate before-and-after images of the points of interest. Humans are still more proficient than models at this task, but that is changing quickly. As more false positives are eliminated, operators will be left with just a few significant changes worth inspecting with boots on the ground.

A side-by-side comparison of the same area imaged by Sentinel-1 (left), a synthetic aperture radar (SAR) satellite, and Planet’s SkySat (right), a high-resolution satellite constellation (80 cm resolution at the time of capture, now 50 cm).
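The pipeline workflow described above is often called tip and cue: a wide-area sensor tips off a location, and a high-resolution sensor is cued to look only there. This minimal sketch assumes SAR detections already exist as points and that all coordinates are in a projected, metre-based coordinate system. It keeps only detections within a buffer of the pipeline route as candidates for optical ordering; the function names and 200 m buffer are hypothetical, and no real ordering API is called.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in a projected, metre-based CRS


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Euclidean distance from point p to the line segment a-b."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0:  # degenerate segment: a and b coincide
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment and clamp to its endpoints.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len2))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def cue_optical(detections: List[Point], pipeline: List[Point], buffer_m: float = 200.0) -> List[Point]:
    """Keep SAR detections within buffer_m of the pipeline polyline.

    Each returned point is a candidate location for a high-resolution optical order.
    """
    if len(pipeline) < 2:
        raise ValueError("pipeline needs at least two vertices")
    segments = list(zip(pipeline, pipeline[1:]))
    return [
        p for p in detections
        if min(point_segment_distance(p, a, b) for a, b in segments) <= buffer_m
    ]
```

Filtering before ordering is what keeps the approach cost-effective: the expensive high-resolution imagery is bought only for the handful of locations that pass.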

Any AI project needs to demonstrate ROI. Value usually comes from replacing manual decision points in a system or process; it seems almost inconceivable now, but humans used to route telephone calls manually. However, the accuracy of these automated decisions is critical to realizing that value. If a computer vision model misidentified a tree species more than 20% of the time, it could have a massive effect on the downstream cost of that lumber. Tolerance for incorrect predictions varies by application, but the cost of mispredictions can be high enough to block adoption.
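The trade-off described above can be written as simple expected-value arithmetic: automation saves the manual cost of each decision, and each wrong decision adds a downstream cost. This sketch assumes every error costs the same and that the model fully replaces the manual step; model running costs are ignored, and the function names and figures are illustrative.

```python
def expected_net_value(n_decisions: int, manual_cost: float,
                       error_rate: float, error_cost: float) -> float:
    """Estimated net value of automating n decisions.

    Savings: manual_cost no longer paid per decision.
    Losses: error_rate of decisions are wrong, each costing error_cost downstream.
    """
    savings = n_decisions * manual_cost
    losses = n_decisions * error_rate * error_cost
    return savings - losses


def break_even_error_rate(manual_cost: float, error_cost: float) -> float:
    """Error rate at which automation stops paying for itself."""
    return manual_cost / error_cost
```

For example, if a manual decision costs 2 units and a misprediction costs 20 units downstream, automation only pays off below a 10% error rate; at 25% it loses money, which is how a seemingly accurate model can still be blocked from adoption.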

Having partnered with the Canadian Space Agency on prototypes in this area, we will continue to invest in pragmatic AI. The space industry has a tremendous opportunity to leverage artificial intelligence and machine learning. Our goal is to make our customers look like heroes, so we look forward to working with more companies that use the EarthCache™ API to skip the massive amount of data preparation and focus on their models.